Advanced Facial Auto-Rigging

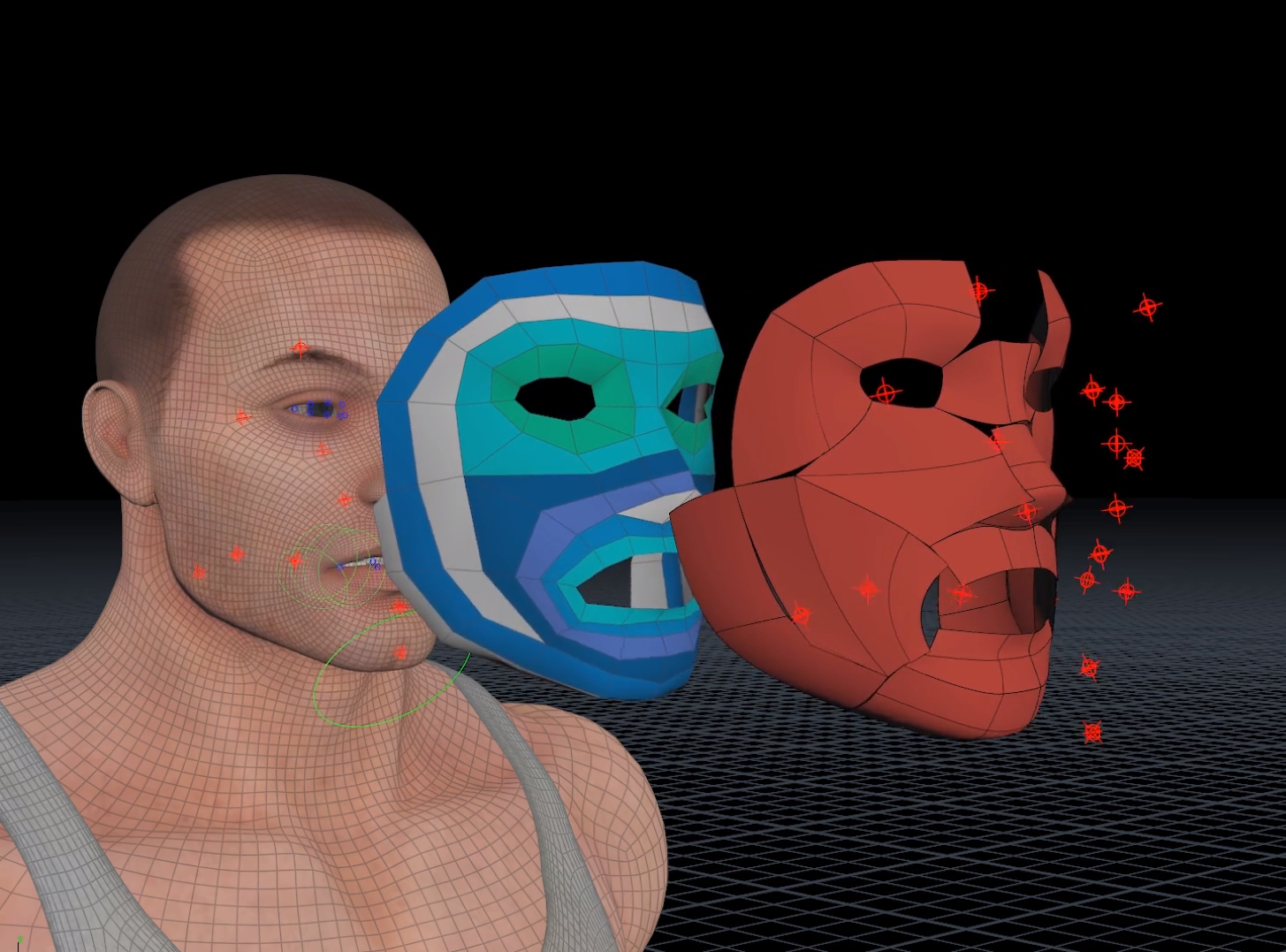

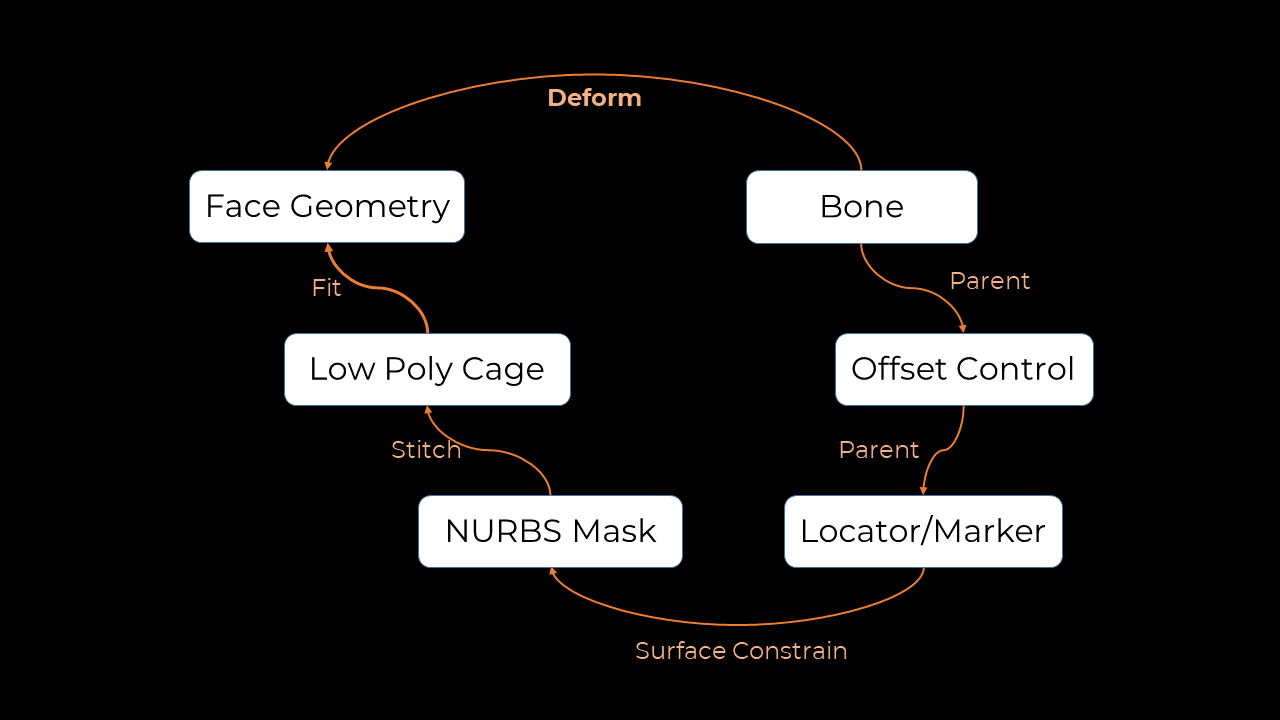

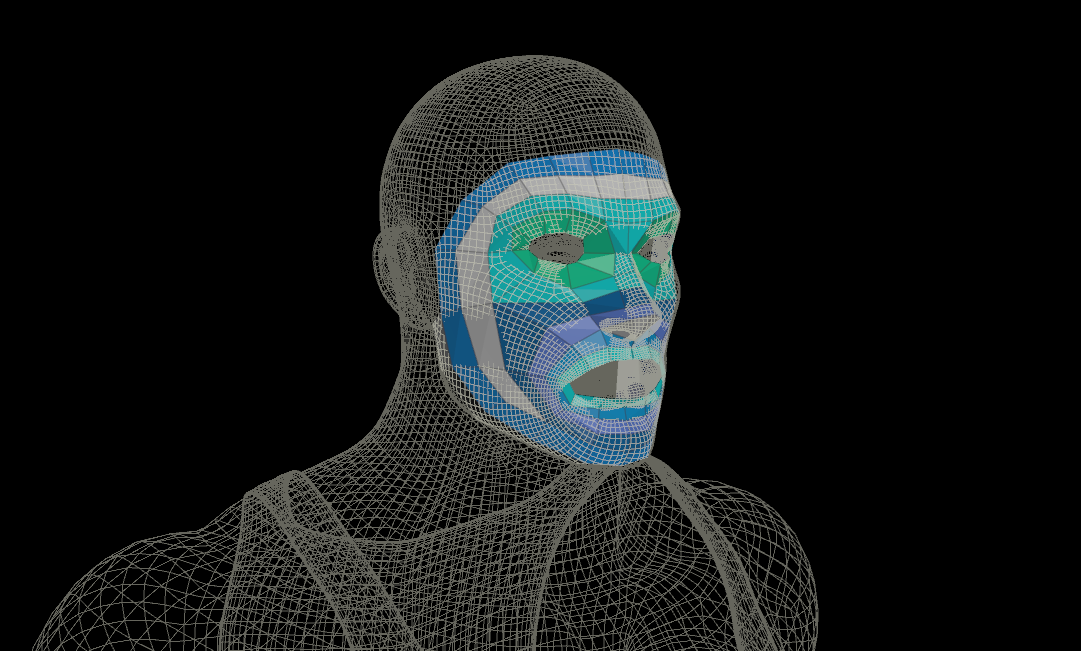

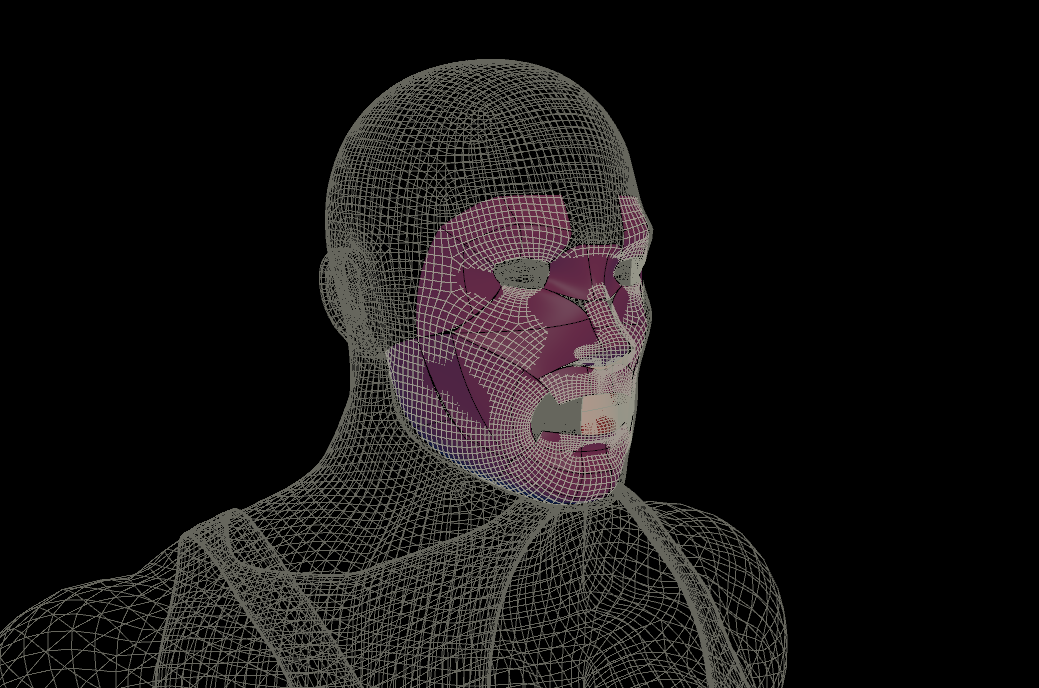

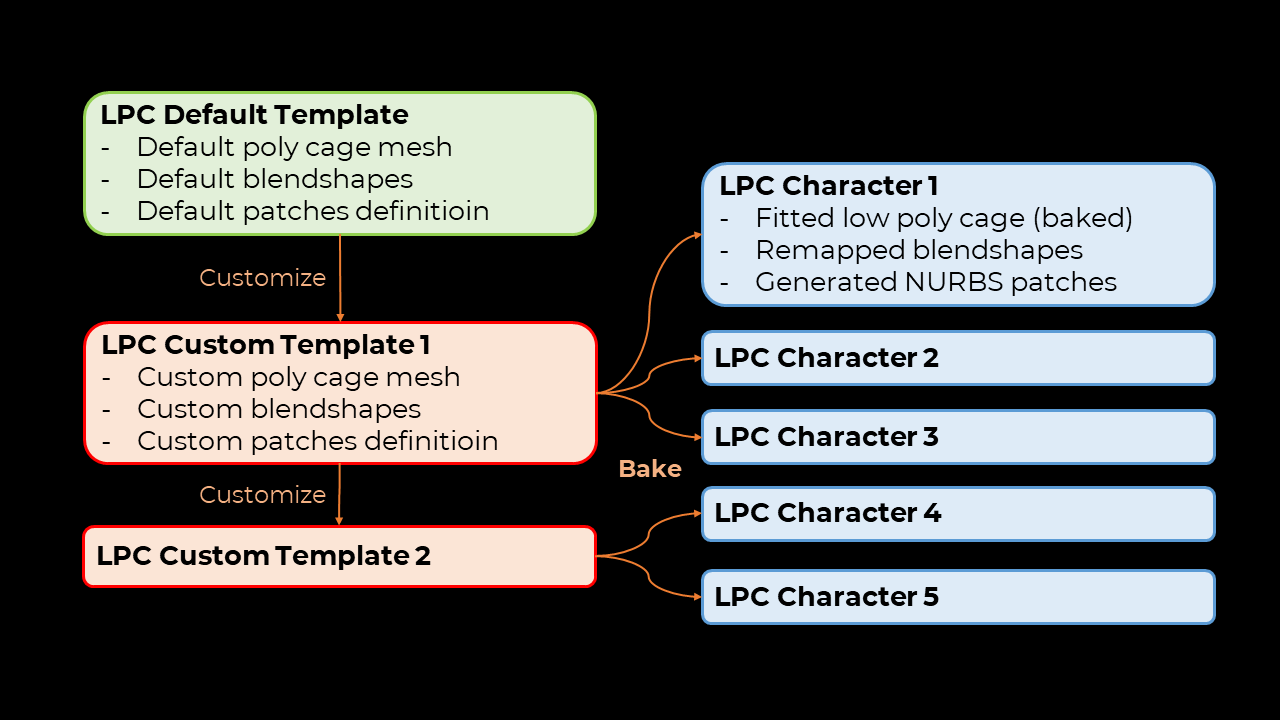

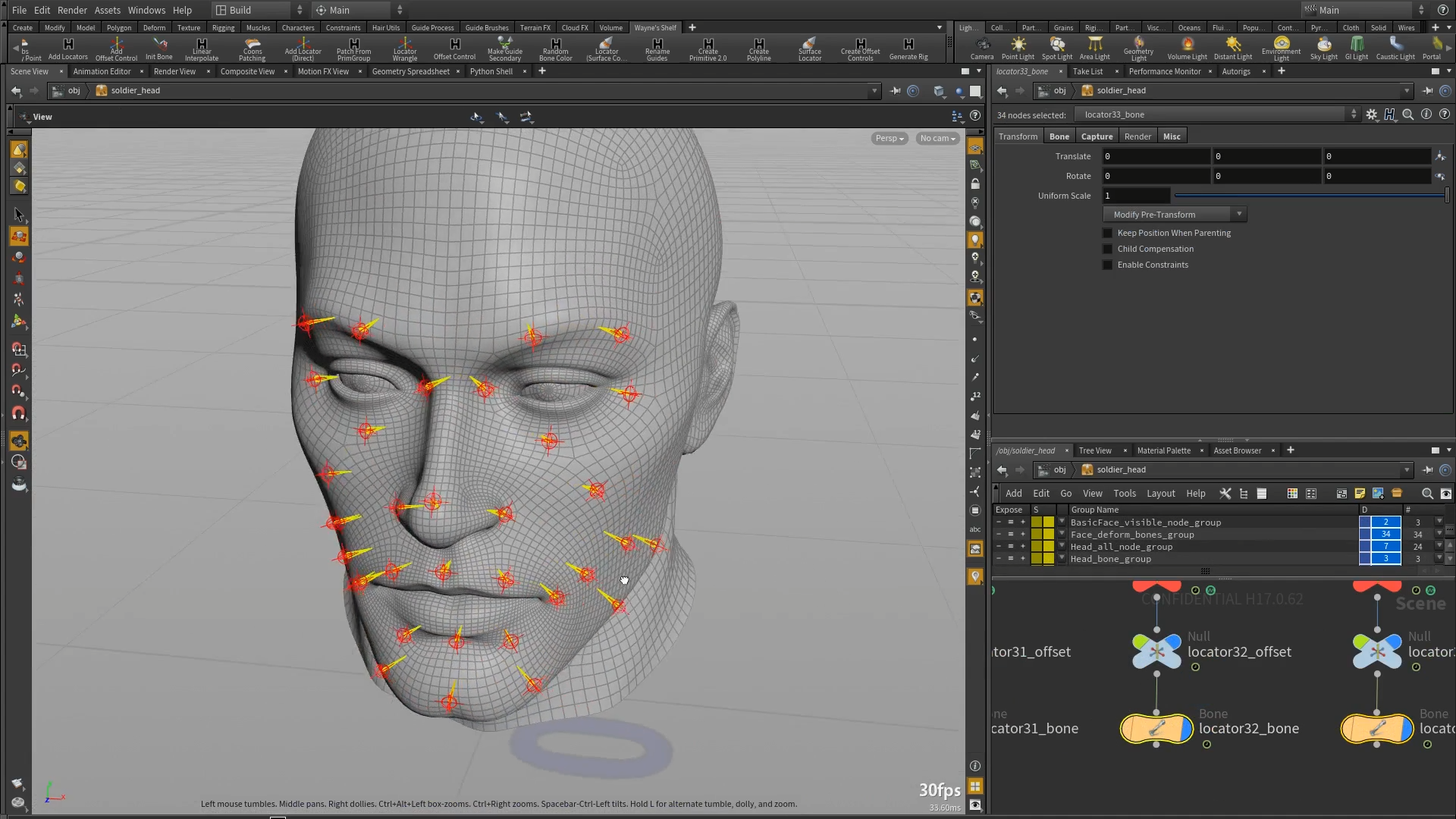

Houdini 16.5 shipped with a basic facial auto-rig that I implemented, which lets users add rigs for the jaw and eyes. Without a full face rig, however, that system is of limited use. I therefore designed and prototyped a full-face auto-rigging system for H17: a FACS-based (Facial Action Coding System) module integrated with Houdini's Autorigs. The solution, inspired by Jeremy Ernst's 2011 GDC talk, automates the facial rigging process using a low-poly face mesh. Using a low-poly "cage" makes animation data and blendshapes transferable across all auto-rigged characters. Special patches are also attached to the character's face based on the low-poly cage, so that controllers move within a confined UV space that best describes the movement of the muscles.
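
To make the idea of a controller confined to a patch's UV space more concrete, here is a minimal sketch in plain Python. It is not the Houdini implementation: the `Patch` and `PatchController` classes, the bilinear patch, and the clamping to the unit square are all assumptions chosen for illustration. In the actual rig the patches are surfaces derived from the low-poly cage.

```python
from dataclasses import dataclass
from typing import Tuple

Vec3 = Tuple[float, float, float]


def lerp(a: Vec3, b: Vec3, t: float) -> Vec3:
    """Linearly interpolate between two 3D points."""
    return tuple(x + (y - x) * t for x, y in zip(a, b))


def clamp01(x: float) -> float:
    """Clamp a value to the [0, 1] range."""
    return max(0.0, min(1.0, x))


@dataclass
class Patch:
    """Hypothetical bilinear patch defined by four corner points."""
    p00: Vec3  # corner at (u=0, v=0)
    p10: Vec3  # corner at (u=1, v=0)
    p01: Vec3  # corner at (u=0, v=1)
    p11: Vec3  # corner at (u=1, v=1)

    def evaluate(self, u: float, v: float) -> Vec3:
        """Return the 3D position on the patch at parameters (u, v)."""
        bottom = lerp(self.p00, self.p10, u)
        top = lerp(self.p01, self.p11, u)
        return lerp(bottom, top, v)


@dataclass
class PatchController:
    """Hypothetical controller that lives in a patch's UV space."""
    patch: Patch
    u: float = 0.5
    v: float = 0.5

    def move(self, du: float, dv: float) -> Vec3:
        """Offset the controller in UV, clamp it to the patch, return its 3D position."""
        self.u = clamp01(self.u + du)
        self.v = clamp01(self.v + dv)
        return self.patch.evaluate(self.u, self.v)


# Example: a slightly warped patch standing in for a region of the face.
patch = Patch((0.0, 0.0, 0.0), (2.0, 0.0, 0.0), (0.0, 2.0, 0.0), (2.0, 2.0, 1.0))
ctrl = PatchController(patch)
start = patch.evaluate(ctrl.u, ctrl.v)
# An oversized move in u is clamped, so the controller stops at the patch edge.
edge = ctrl.move(1.0, 0.0)
```

Because the controller stores only `(u, v)`, the same values can be evaluated on a differently shaped patch on another character. That is one way to read the transferability the cage is meant to provide.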